To go wrong in one’s own way is better than to go right in someone else’s. In the first case you are a man, in the second you’re no better than a bird.

-Fyodor Dostoevsky

Intelligence vs Obedience

No one doubts that generative AI is improving at a rapid pace. Improvements in language models fall into two broad categories: gains in general intelligence and gains in model pliability.

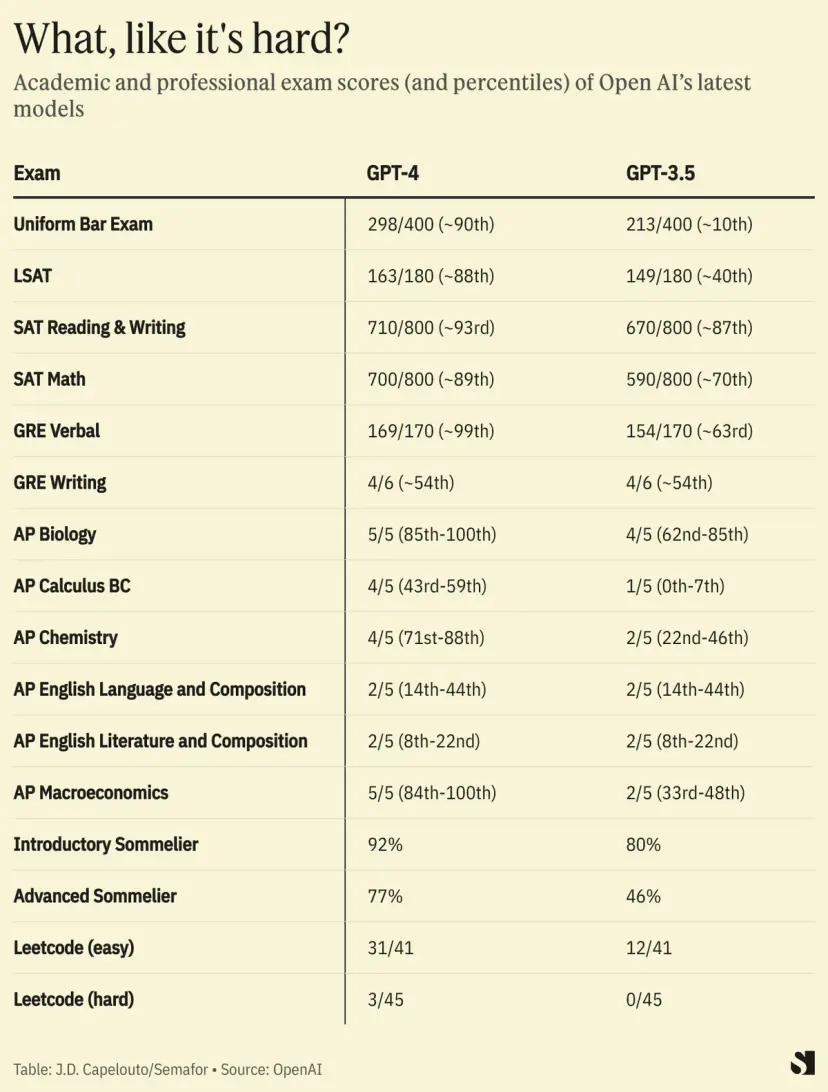

Most of the standard tests we run on generative models evaluate the first component. Whether we’re tracking a model’s performance on the GRE or checking whether it can recall specific facts without hallucinating, we’re assessing the generic concept of intelligence as a raw commodity.

Model pliability is often overlooked. In a world where open-source models are proliferating, many believe in a future populated by thousands (or perhaps millions) of custom-tuned models. These models will be trained at their very core on piles of data we provide them. At present, ten thousand unique examples is the bare minimum needed to start affecting this core part of the model.

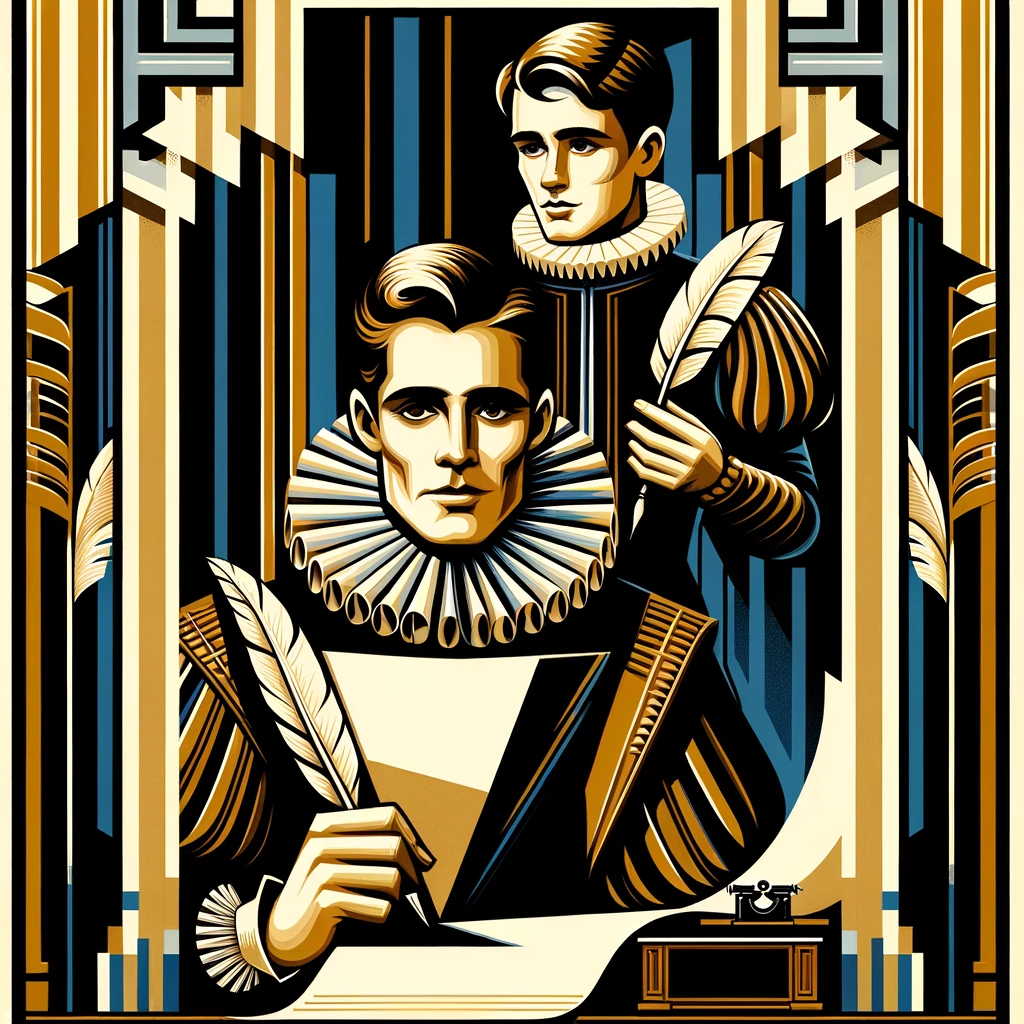

Shakespeare, across all his written work, produced a little under 900,000 words. If we use 300-word examples of his writing, that yields fewer than 3,000 unique chunks of content to feed the model, well short of the ten thousand examples needed to start. Of course, there are ways to expand that corpus, both with similar human-generated content and with synthetic variations, but the point remains: even the most prolific individual writers provide scant material to train models on.
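The arithmetic above can be checked in a few lines. This is a minimal sketch that assumes the essay’s round figures: 900,000 words as an upper bound on the corpus, non-overlapping 300-word examples, and the ten-thousand-example threshold mentioned earlier.

```python
# Back-of-the-envelope check of the Shakespeare arithmetic.
# Assumption: the essay's round figures (an upper bound of 900,000 words,
# 300-word examples, and a 10,000-example threshold for custom tuning).
CORPUS_WORDS = 900_000       # "a little under 900,000 words"
WORDS_PER_EXAMPLE = 300      # size of each training chunk
FINE_TUNE_MINIMUM = 10_000   # examples needed before tuning has an effect


def count_chunks(total_words: int, chunk_size: int) -> int:
    """Number of complete, non-overlapping chunks of chunk_size words."""
    return total_words // chunk_size


chunks = count_chunks(CORPUS_WORDS, WORDS_PER_EXAMPLE)
shortfall = FINE_TUNE_MINIMUM - chunks
coverage = chunks / FINE_TUNE_MINIMUM

print(f"{chunks} chunks, {shortfall} short of {FINE_TUNE_MINIMUM} "
      f"({coverage:.0%} of the threshold)")
```

Under these assumptions the script prints `3000 chunks, 7000 short of 10000 (30% of the threshold)`: the complete works of Shakespeare cover less than a third of that threshold.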

The solution is to optimize for model pliability. Train a model on everything you can find, but when it comes time to generate, optimize for complete deference to the user’s prompt. This provides a broad base of knowledge for general human understanding while allowing laser-focused specificity in outputs. Training a custom model that locks you into a fixed set of behaviors may seem right for some applications. But when it comes to embedding human expertise in AI, the one constant is change. Expectations keep shifting, and as they do, your models must adapt.

You are the Golden Goose

It is wrong to think that raw data is the most important asset in delivering a valuable, differentiated generative experience. We see this type of thinking everywhere; even McKinsey is on board the data train. Your accumulated works are valuable, yes. They are a brilliant reflection of your expertise, your insights, your creativity, and your identity. One might even say they’re worth their weight in gold.

But if your works are the golden eggs, then you are the golden goose. Your process for producing content is unequivocally absent from the content itself. Throwing that content at a training process and expecting results is like reading a few Shakespeare plays and expecting to become a world-famous playwright.

On the other hand, what if you could apprentice yourself to Shakespeare? What if you worked under him for years, watched him write, even had a few long drunken nights discussing his deepest thoughts? There’s no guarantee you’d become the most famous playwright in the world, but you’d plausibly be a contender.

In generative AI, prompting is the epitome of process transparency. When you can clearly see, in natural language, the frameworks guiding generation, you kick off a virtuous cycle that brings increasing clarity to your own creative process.

You don’t start with the answers; you find them along the way…

Iterative prompt design not only lets us embed expertise into a model; it also lets us discover, for the first time, the processes we ourselves use to create, processes we may not fully understand at the outset.

In iterative prompt design, you start with a blank slate. You shape your tool slowly, using example questions and user feedback as your guide. As your tool encounters new situations, you augment the prompt, adding more nuance each time.
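One way to picture this loop is to treat the prompt as a growing list of rules, each added when the tool meets a situation it handled poorly. This is a hypothetical sketch: `PromptDraft`, `refine`, and `render` are illustrative names, not part of any library, and the step of actually sending the rendered prompt to a model is deliberately left out.

```python
# A minimal sketch of iterative prompt design, assuming a prompt is simply a
# base instruction plus an ordered list of guidelines learned from feedback.
# PromptDraft, refine, and render are hypothetical names, not a library API.
from dataclasses import dataclass, field


@dataclass
class PromptDraft:
    role: str = ""  # start from a (nearly) blank slate
    guidelines: list[str] = field(default_factory=list)
    version: int = 0

    def refine(self, situation: str, guideline: str) -> None:
        """Record a new rule when the tool meets a situation it handled poorly."""
        note = f"When {situation}: {guideline}"
        if note not in self.guidelines:  # skip lessons already recorded
            self.guidelines.append(note)
            self.version += 1

    def render(self) -> str:
        """Assemble the prompt text that would be sent to a general-purpose model."""
        lines = [self.role] if self.role else []
        lines += [f"{i}. {g}" for i, g in enumerate(self.guidelines, start=1)]
        return "\n".join(lines)


draft = PromptDraft(role="You help me draft short product announcements.")
draft.refine("the user gives no audience",
             "ask who the announcement is for before writing")
draft.refine("the draft exceeds 150 words",
             "cut it down and keep only the headline benefit")
print(f"v{draft.version}")
print(draft.render())
```

Each refinement is a plain sentence you can read, edit, or delete, so the prompt doubles as a written record of your process.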

Over time, your prompt becomes a more thoughtful outline of your process than any you’ve had before. Best of all, you’re conscious of the entire experience. A prompt is the densest possible surface area of control, and that control is completely transparent to you.

Even companies that clearly have the resources to train custom models for every little task choose general-purpose models with complex prompt design. OpenAI, for example, is known to rely on elaborate prompts at various stages.

AI vs Creativity

Generative AI is only a creativity killer for those who lack the ability to engage with it creatively. We’ve already thrown nearly all the written data humanity has ever produced at these models. Now it’s time to stand alongside them and move forward together. As more people engage in iterative prompt design, they will unlock parts of their creativity they never realized they had.

A joke I recently heard from an OpenAI employee goes something like this: “They say OpenAI is going to take everyone’s job. We’ll do that just as soon as we can hire someone to tell us what all the jobs are.” It turns out there is no finite list of all jobs, so the process of telling generative AI what all the jobs are will never be complete.

A new type of economy is emerging, which we call the GPT economy. All this means is that, going forward, we will be responsible not only for our creations but also for our processes. This world will be driven by self-aware humans shaping the behavior of pliable, general-purpose models with prompts that reflect those processes.

To take the first step towards this future, build a tool for yourself. It takes 5 minutes. Share it with others, improve it, and you’ll be well on your way.